“Evidence doesn’t lie” - Gil Grissom, CSI

Ten years ago police were on the hunt for an unusual serial killer. There were several factors that made this suspect unique. Firstly, she was female, a rarity amongst serial killers. Secondly, there seemed to be no pattern to her crimes. Her DNA was found at crime scenes in France, Germany and Austria dating back to 1993. On a cup at the scene of the murder of a 62-year-old woman. A knife at the house of a murdered 61-year-old man. A syringe containing heroin. Altogether she was linked to forty separate crimes including six murders. Her accomplices included Slovaks, Iraqis, Serbs, Romanians and Albanians. This was an unprecedented case. A modern day Moriarty. She was called ‘The Phantom of Heilbronn’ or ‘The Woman Without a Face’.

Then in 2009 the police found her. After a case lasting eight years, 16,000 man hours and a cost of €2 million, the police had their suspect. She was a technician working at the factory which made the cotton swabs the forensics team used to collect samples. As she had gone about her work, moving and speaking, her saliva and skin had got on the swabs and contaminated them. Police confirmed that every sample of the Phantom’s DNA had been collected with swabs from the same factory. The Phantom of Heilbronn did not exist.

If you think about it, it was incredibly unlikely that one woman was involved in so many different crimes across so many countries over so many years. It actually makes much more sense that it was an error. And yet the investigators were blinded by the result in black and white on a screen.

This can happen in Medicine. A result from a blood test or imaging comes back positive or negative and we just accept it. We have to use our brains and think about the tests we’re ordering and what the results mean.

Sensitivity

If you have a certain disease we want a test that will detect if you have it and come back positive. That is a test’s sensitivity. We don’t want false negatives: people with a disease not testing positive. A sensitivity of 100% means that the test will always come back positive if you have the disease. A sensitivity of 50% means that the test will correctly detect disease in 50% of patients with the disease. The other 50% get a false negative. Sensitivity is very important if you’re testing for a serious disease. For example, if you’re testing for cancer you don’t want many false negatives.

Specificity

As well as detecting disease you also want the test to accurately rule out a disease if the patient doesn’t have it. This is its specificity. We don’t want false positives: people who don’t have the disease testing positive. A specificity of 100% means that the test will always come back negative if you don’t have the disease. A specificity of 50% means that 50% of people who don’t have a disease will correctly test negative. The other 50% will be given a false positive result. Specificity is very important if there’s a potentially hazardous treatment or further investigation following a positive result. If a positive result means your patient has to undergo a surgical procedure or be exposed to radiation by a CT scan you’re going to want as few false positives as possible.
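Both definitions boil down to simple ratios once you line up test results against who really has the disease. Here is a minimal sketch in Python. The function names and the counts are invented purely for illustration and don't come from any real study.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Proportion of people WITH the disease who test positive."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Proportion of people WITHOUT the disease who test negative."""
    return true_neg / (true_neg + false_pos)


# Hypothetical counts: 100 people with the disease, 200 without.
print(sensitivity(true_pos=90, false_neg=10))   # 0.9
print(specificity(true_neg=150, false_pos=50))  # 0.75
```

In this made-up example the test misses 10 of the 100 people with the disease (false negatives) and wrongly flags 50 of the 200 people without it (false positives).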

The trouble is that no test is 100% sensitive or 100% specific. This has to be understood. No result can be interpreted properly without understanding the clinical context.

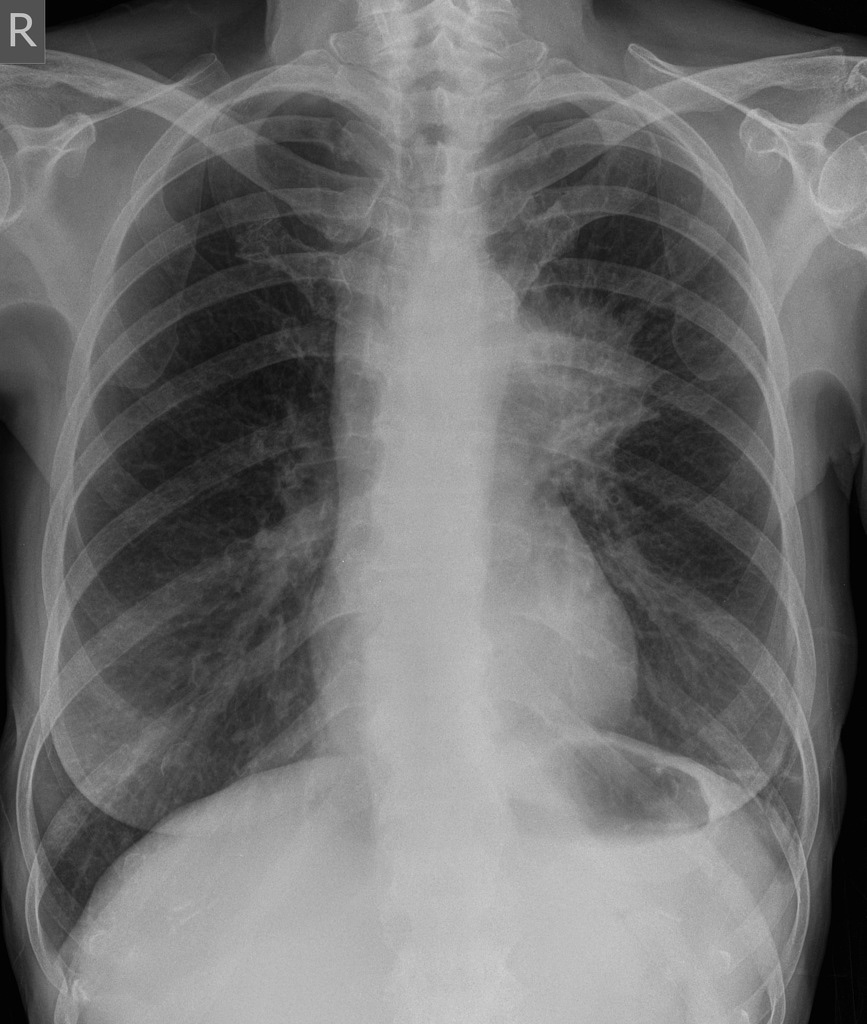

For example, the sensitivity of a chest x-ray for picking up lung cancer is about 75%. That means it gives a true positive for 3 out of 4 patients with lung cancer, with the other patient getting a false negative. If your patient is in their twenties, a non-smoker with no family history and no symptoms other than a cough, you’d probably accept that 1/4 chance of a false negative and be happy you’ve ruled out a malignancy unless the situation changes. However, in a patient in their seventies with a smoking history of over 50 years who’s coughing up blood and has had unexplained weight loss, suddenly that 75% chance of detecting cancer on a chest x-ray doesn’t sound so comforting. Even if you can’t see a mass on their chest x-ray you’d still refer them for more sensitive imaging. That’s because the second patient has a much higher probability of having lung cancer based on their history. So high in fact that choosing a test with such poor sensitivity as a chest x-ray might not be the right decision to make. This is where pre-test probability comes in.

Pre-test probability

This principle of understanding the clinical context is called the pre-test probability. Basically it is the likelihood the individual patient in front of you has a particular condition before you’ve even done the test for that condition.

The probability of the condition or target disorder, usually abbreviated P(D+), can be calculated as the proportion of patients with the target disorder, out of all the patients with the symptom(s), both those with and without the disorder:

P(D+) = D+ / (D+ + D-)

(where D+ indicates the number of patients with target disorder, D- indicates the number of patients without target disorder, and P(D+) is the probability of the target disorder.)
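As a quick sketch of that formula in Python (the function name and the cohort numbers are made up for the example, not taken from any dataset):

```python
def pre_test_probability(with_disorder: int, without_disorder: int) -> float:
    """P(D+) = D+ / (D+ + D-)."""
    return with_disorder / (with_disorder + without_disorder)


# Hypothetical cohort: 1,000 patients with the same symptom,
# of whom 30 turn out to have the target disorder.
p = pre_test_probability(with_disorder=30, without_disorder=970)
print(f"{p:.2%}")  # 3.00%
```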

Pre-test probability depends on the circumstances at that time. For example, the pre-test probability of a particular patient attending their GP with a headache having a brain tumour is 0.09%. Absolutely tiny. However, with every re-attendance with the same symptom or developing new symptoms or even then attending an Emergency Department, that pre-test probability goes up.

Pre-test probability helps us interpret results. It also helps us pick the right test to do in the first place.

Pulmonary embolism: a difficult diagnosis

Pulmonary embolism (blood clot on the lung) affects people of all ages, killing up to 15% of patients hospitalised with a PE. This is reduced by 20% if the condition is identified and treated correctly with anticoagulation. PE doesn’t play fair though and has very non-specific symptoms such as shortness of breath or chest pain. The gold standard for detecting or ruling out a PE is with a computerised tomography pulmonary angiogram (CTPA) scan. However, a CTPA scan involves exposing the chest and breasts to a lot of radiation. For instance, a 35 year old woman who has one CTPA scan has her overall risk of breast cancer increased by 14%. There’s also the logistical impossibility of scanning every patient we have. So we need a way of ensuring we don’t scan needlessly.

We do have a blood test, checking for D-Dimers which are the products of the body’s attempts to break down a clot. The trouble is other conditions such as infection or cancer can increase our D-Dimer as well. The D-Dimer test has a sensitivity of 95% and a specificity of 60%. That means that it will fail to detect PE in 5% of patients who have one, meaning we miss a potentially fatal disease in 1/20 patients with a PE. It also means it will come back positive in 40% of patients who don’t have a PE, and so risk exposing them to a scan which increases their risk of cancer. Not to mention starting anticoagulation treatment (and so increasing risk of bleeding such as a brain haemorrhage) needlessly. So we have to be careful to only do the D-Dimer test in the right patients. This is why we need to work out our patient’s risk.
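To make those percentages concrete, here is a rough sketch of the arithmetic in Python. It uses only the 95% sensitivity and 60% specificity quoted above. The function name and the group sizes of 1,000 are assumptions chosen to keep the numbers round.

```python
SENSITIVITY = 0.95  # D-Dimer sensitivity as quoted in the text
SPECIFICITY = 0.60  # D-Dimer specificity as quoted in the text


def expected_errors(n_with_pe: int, n_without_pe: int) -> tuple[float, float]:
    """Expected false negatives and false positives in a tested group."""
    false_negatives = n_with_pe * (1 - SENSITIVITY)     # PEs the test misses
    false_positives = n_without_pe * (1 - SPECIFICITY)  # needless positives
    return false_negatives, false_positives


# Hypothetical group: 1,000 patients with a PE and 1,000 without.
fn, fp = expected_errors(1000, 1000)
print(round(fn), round(fp))  # 50 400
```

So out of 1,000 patients with a PE about 50 would be missed, and out of 1,000 patients without one about 400 would test positive anyway.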

Luckily there is a risk score for PE called the Wells score. This uses signs, symptoms, the patient’s history and clinical suspicion and can stratify the patient as low or high risk for a PE. We then know the chances of whether the patient will turn out to have a PE based on whether they are low or high risk.

Only 12.1% of low risk patients will have a PE. At such a low chance of PE we accept the D-Dimer’s 5% probability of a false negative and are keen to avoid the radiation exposure of a scan and so do the blood test. If it is negative we accept that and consider PE ruled out unless the facts change. If it is positive we can proceed to imaging.

However, 37.1% of high risk patients will have a PE. Now it’s a different ballgame. The pre-test probability has changed. A high risk patient has a more than 1/3 chance of having a PE. Suddenly the 95% sensitivity of a D-Dimer doesn’t seem enough knowing there’s a 1/20 chance of missing a potentially fatal diagnosis. The patient is likely to deem the scan worth the radiation risk knowing they’re high risk. So in these patients we don’t do the D-Dimer. We go straight to imaging. If a D-Dimer has been done for some reason and is negative we ignore it and go to scan. We interpret the evidence based on circumstances and probability.
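You can put rough numbers on this with Bayes’ theorem. The sketch below is illustrative arithmetic only, not a clinical tool. It takes the pre-test probabilities (12.1% and 37.1%) and the D-Dimer figures (95% sensitive, 60% specific) from the text, and it assumes the test performs the same in both risk groups, which may not hold in practice. The function name is made up for the example.

```python
def prob_disease_after_negative(pre_test: float,
                                sensitivity: float,
                                specificity: float) -> float:
    """Bayes' theorem: P(disease | negative test result)."""
    false_neg = pre_test * (1 - sensitivity)  # has disease, tests negative
    true_neg = (1 - pre_test) * specificity   # no disease, tests negative
    return false_neg / (false_neg + true_neg)


for label, pre_test in [("low risk", 0.121), ("high risk", 0.371)]:
    post = prob_disease_after_negative(pre_test, 0.95, 0.60)
    print(f"{label}: {pre_test:.1%} before, {post:.1%} after a negative D-Dimer")
# low risk: 12.1% before, 1.1% after a negative D-Dimer
# high risk: 37.1% before, 4.7% after a negative D-Dimer
```

On these figures a negative D-Dimer leaves a low risk patient with roughly a 1% chance of PE, but a high risk patient still has close to a 5% chance. Same test, same result, very different meaning.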

This is the basis of the NICE guidance for suspected pulmonary embolism.

Grissom is wrong; the evidence can lie. Some of the results we get will be phantoms. Not only must we pick the right test, we must also think: will I accept the result I might get?

Thanks for reading.

- Jamie