The United Kingdom is in the throes of a pandemic. A new virus without cure or vaccine kills with frightening speed. The Prime Minister is struck down with fever. His life hangs in the balance. It is September 1918. The Prime Minister is David Lloyd George. History may not repeat but she does love to rhyme.

15th September 1918. David Lloyd George, Prime Minister and leader of the wartime coalition government, although not the leader of his party the Liberals, visits Manchester to receive the freedom of the city. It is the last few months of the bloodiest conflict known to man. By the end of the month the German High Command would telegram the Kaiser that victory was impossible. Peace would soon be in sight. However, far more people worldwide would lose their lives to a different, invisible enemy.

Lloyd George receiving the Freedom of Manchester. Photo: Illustrated London News [London, England], 21 September 1918

H1N1 influenza may well have been circulating in military camps for a while before 1918; the confines and poor hygiene were perfect for viruses to spread and mutate. There is much debate as to where the virus first appeared, but given wartime censorship it was credited to neutral Spain, whose open reporting gave the impression of being the disease’s epicentre. It would go on to infect a third of the world’s population, killing at least 50 million people, more than double the deaths in World War One.

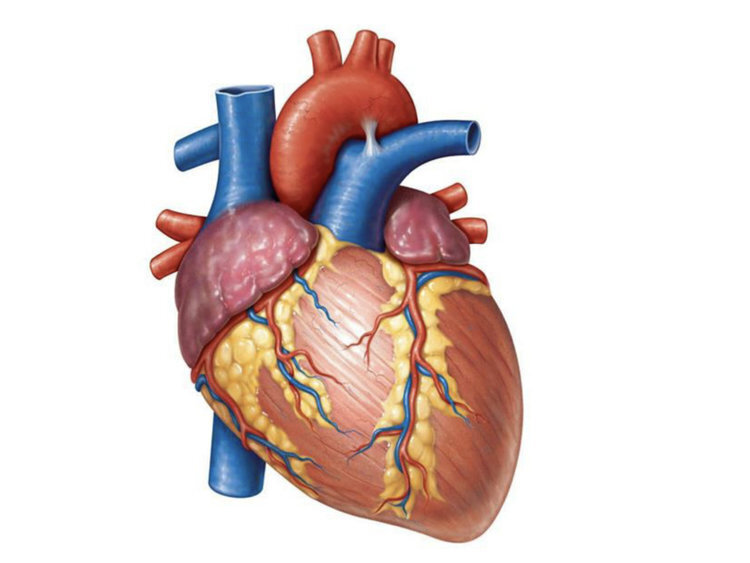

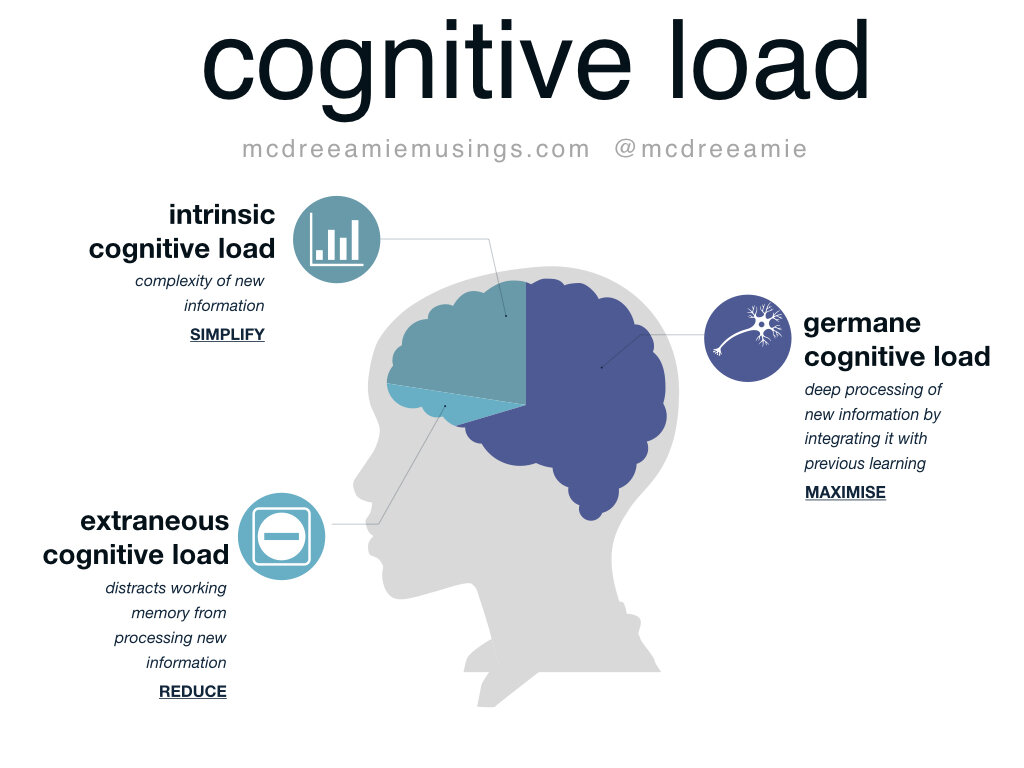

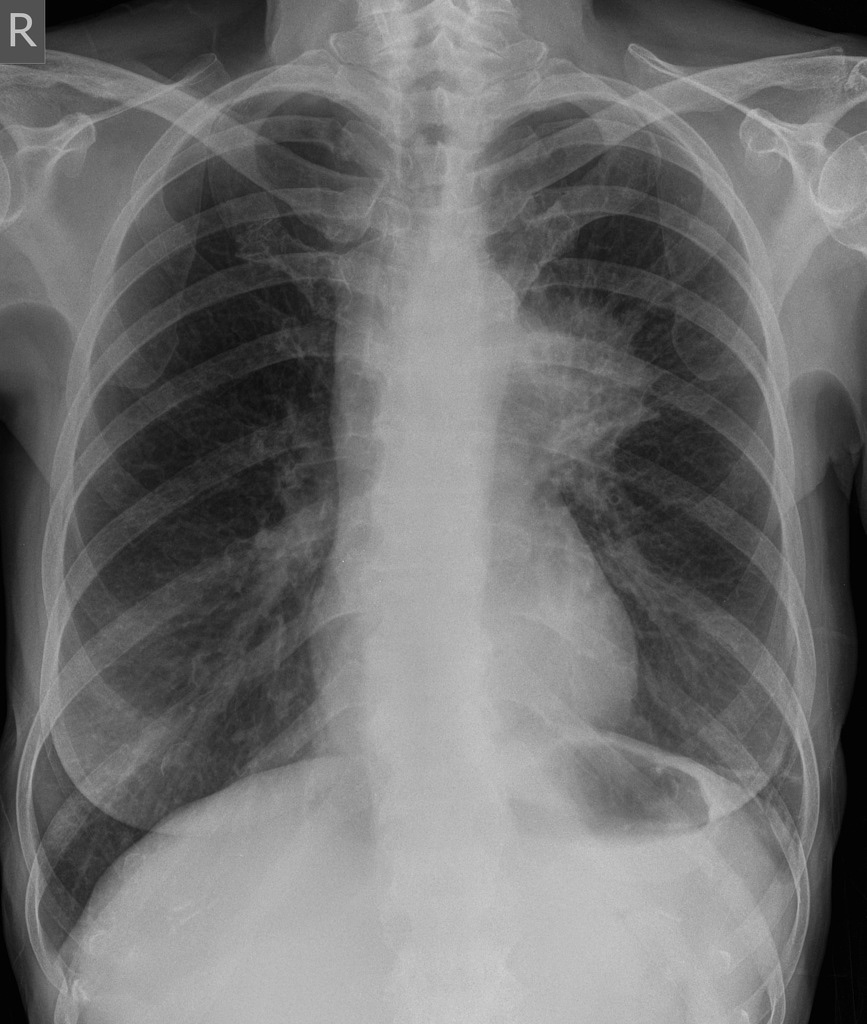

Unusually for ‘flu, the victims were not children or the elderly but young to middle-aged adults. There are a number of theories for this: that their stronger immune systems actually turned against them and made the disease worse, or that those old enough to have lived through the 1889–1890 ‘Russian ‘flu’ pandemic had retained some form of immunity. Whatever the reason, those who succumbed rapidly developed pneumonia. As their lungs failed to supply their face and extremities with oxygen, they would go blue with hypoxia. This harbinger of death was given the name ‘heliotropic cyanosis’ after the flower whose colour patients were said to resemble.

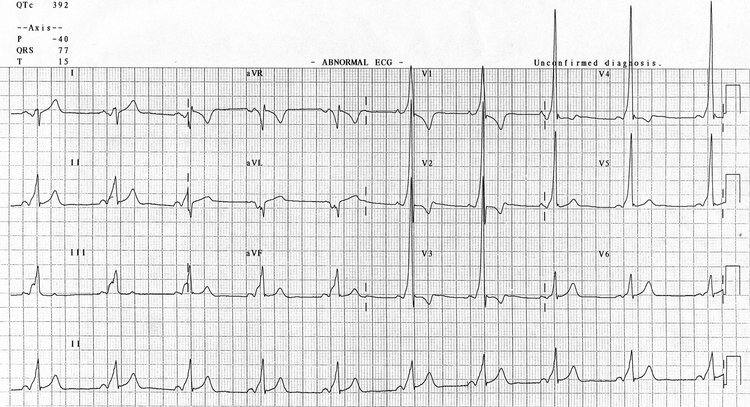

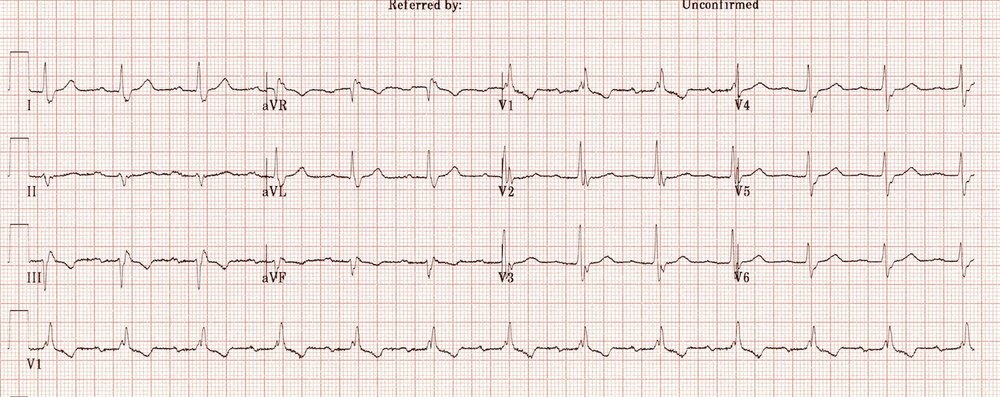

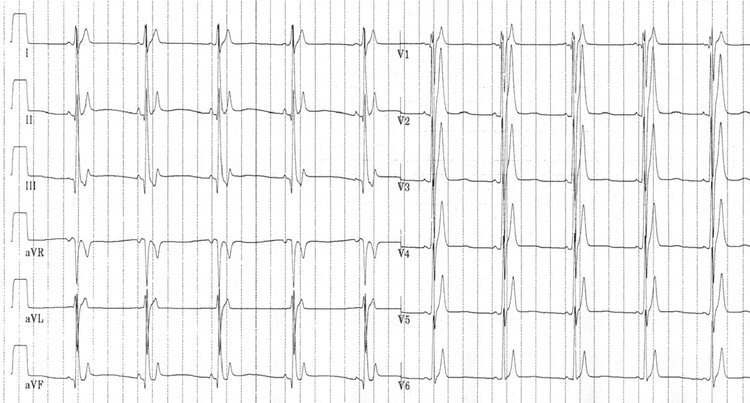

A plate from Clinical Features of the Influenza Epidemic of 1918–19 by Herbert French

And so to Albert Square, Manchester. David Lloyd George receives the keys to the city. The weather is appalling. Pouring with rain, the Prime Minister is soaked during the lengthy ceremony. He is met by dignitaries and well-wishers, shaking hands and exchanging pleasantries. By the end of the day he is hit by ‘a chill’. Although underplayed, this chill renders him unable to leave Manchester Town Hall. A hospital bed is installed for Lloyd George. His personal physician visits him daily. It is eleven days before the Prime Minister is well enough to leave his bed, wearing a respirator both to protect his stricken lungs and to prevent infection of others.

Manchester itself was to be an innovator in its response to the ‘flu pandemic. At the time there was no centralised Ministry of Health, and so public health was a matter for local authorities under the auspices of Medical Officers. The Medical Officer for Manchester since 1894 was James Niven, a Scottish physician and statistician. With total war still ongoing, Sir Arthur Newsholme, a senior health advisor to the British government, advised that even with ‘flu spreading, munitions factories had to remain open and troop movements could not be interrupted. It was up to Medical Officers to think autonomously. Niven looked back at the pandemic of 1889–90 and noted that, unlike seasonal ‘flu, which strikes annually, pandemic ‘flu came in waves, with each wave often more virulent than the one before. He argued:

“public health authorities should press for further precautions in the presence of a severe outbreak”

James Niven, Creative Commons

After the first cases of influenza were seen in Manchester in spring 1918, Niven therefore worked to prepare the city for the next wave, which he predicted would hit later that year. Manchester was a densely packed working-class city, a perfect breeding ground for disease. He closed schools and public areas such as cinemas. Areas which couldn’t be closed were disinfected. He compiled statistics to be published on posters throughout the city to give people as much information as possible. He became a regular columnist in the Manchester Guardian, advising readers on the symptoms of the disease. He advised that anyone showing symptoms must,

“on no account join assemblages of people for at least 10 days after the beginning of the attack, and in severe cases they should remain away from work for at least three weeks”

Manchester’s ‘flu outbreak would peak on 30th November 1918. Niven reflected that it might have occurred sooner without Armistice celebrations, where he was powerless to prevent people congregating on the streets. Niven would remain in post until 1922. As well as his work fighting influenza, he also led slum clearance, the installation of sanitation and improvements in air quality. Despite Manchester’s population increasing from 517,000 to 770,000 during his tenure, the death rate per 1,000 population fell from 24.26 to 13.82. Despite his success, in retirement he would be struck by depression. In 1925 he took poison and drowned himself in the sea off the Isle of Man.

Lloyd George would make a full recovery from his illness. He led the country’s Armistice celebrations and remained as Prime Minister until 1922 through the support of his Conservative coalition partners. His struggles for the leadership of the Liberals with his long-time rival Herbert Asquith would dominate the party for at least the next decade and see them fall from government to third place in British politics. They would never return. Lloyd George remains the last Liberal Prime Minister in the United Kingdom. He would live to see his hard-fought peace shatter and very nearly lived to see it return, dying in March 1945.

History doesn’t repeat but she does rhyme. It is human nature to look for patterns and reasons, comparing the present to what has gone before. For Lloyd George stricken with influenza, see Boris Johnson admitted to intensive care with COVID-19. For James Niven, see Chris Whitty. However, our knowledge of disease, access to sanitation and progress in healthcare are without equal in history. The H1N1 influenza virus behind the pandemic of 1918–19 would not be genetically sequenced until 1999. When COVID-19 first emerged in late December 2019, the genetic sequence of the virus was identified within a month. Intensive care and ventilation weren’t even figments of the imagination for the patient in 1918, Prime Minister or not. However, until a cure or vaccine for COVID-19 is realised, our best weapon against it remains the advice of James Niven from over a century ago. From a time before social media or hashtags. Stay home.